
Creating Einstein's Voice

In a partnership with Digital Human creators UneeQ, we brought Albert Einstein's voice back to life. Here is how we did it.

Matt Lehmann, COO

October 18, 2023

Why do we like movies? There are many answers to this question, but one of them is that movies lead you into worlds you normally don’t have easy access to. In those worlds, you can watch Vikings fight, see astronauts travel to different planets, or witness the sports team manager's pep talk in the locker room before the team triumphs over its opponents.

Often, the characters in movies are historical figures, such as Ragnar Lothbrok (Travis Fimmel) or Neil Armstrong (Ryan Gosling). Not only do they tell us about their great, inspiring stories; we also learn about them as people.

Unfortunately, in real life, it is not so easy. Sure, there are documentaries re-enacting historical events with actors, but this is still a fully one-directional experience - we can’t interact with them.

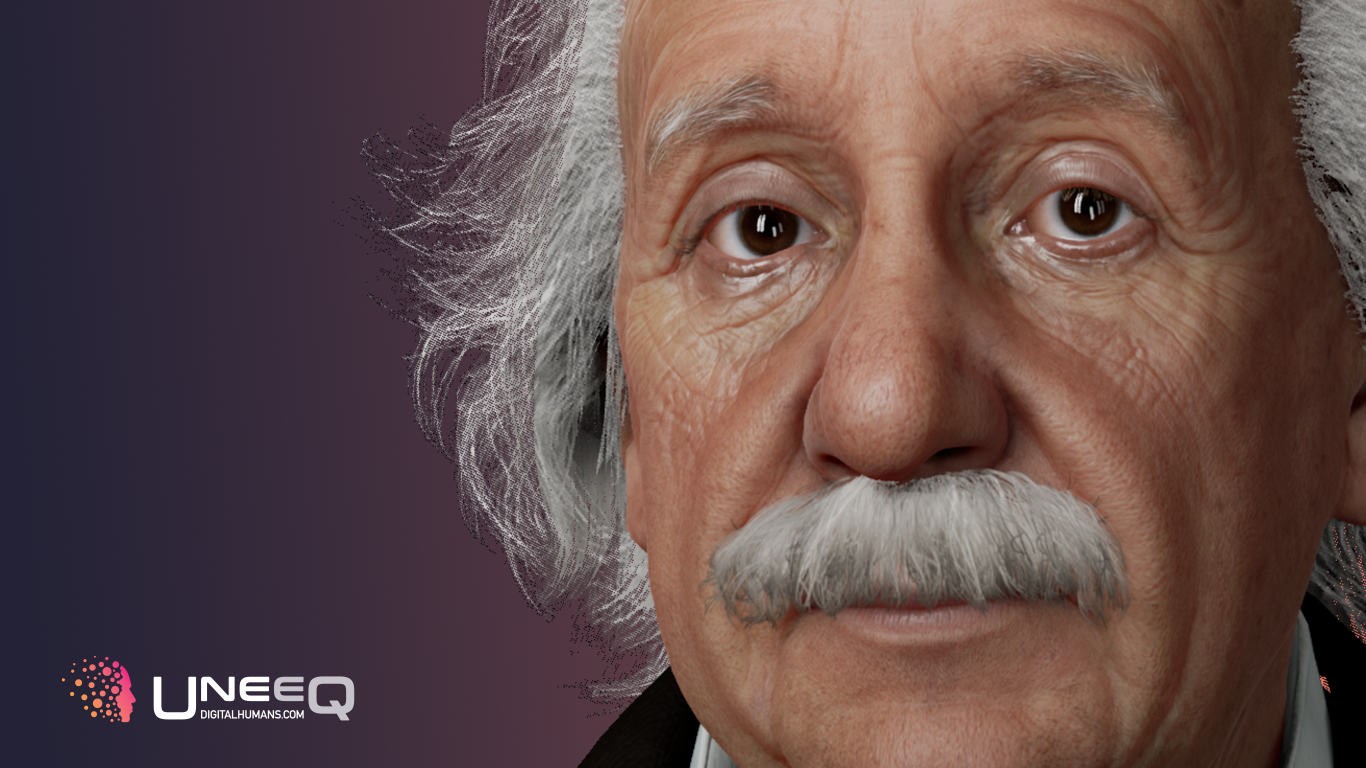

Now, for the first time, this has changed. Together with partners UneeQ, a digital humans company based in the US and New Zealand, Aflorithmic has created a digital version of Albert Einstein.

Wouldn’t it be an absolute game changer if people could learn about basic concepts of physics from the genius himself? And what if you could ask him any question about himself, his world views or anything else and receive an immediate answer?

For some time now, UneeQ has been working on creating digital humans you can successfully have a conversation with. For this particular proof of concept, they chose Albert Einstein because he ticks all of the boxes: a widely known figure, a true genius, very interested in technology, and someone a lot of people would actually like to talk to.

Using UneeQ's top-notch character rendering for the realistic looks and an advanced computational knowledge engine as a brain to bring Einstein back to life, the experience is artificial intelligence at its best!

But what did the real Einstein sound like?

There was only one thing missing: a convincing voice able to answer any question and that sounds like Einstein.

But what did the real Einstein sound like? The answer is: most people don’t know, and they likely don’t even care. What they do care about is being able to understand him easily and feeling like they are interacting with Einstein. In other words, they have a somewhat clear image in their head of what they expect, but that image doesn’t necessarily represent reality.

Based on historical recordings we gathered information on what makes Einstein Einstein. What we found was:

- He had a very thick German accent
- His pitch was rather high
- He spoke slowly, wisely, and sounded friendly

However, there was a problem: the recordings of Einstein are of low quality, his accent is too thick to easily understand, and the number of recordings is very limited. In order to clone a voice, this is pretty bad news because using the data available would have led to very poor results.

This is where we go back to our movie analogy from the beginning: taking the input above, we designed a voice character that people would recognise as Einstein. We made him easy for audiences to understand, much as actors in historical movies speak modern English.

The result is a voice character that is based on the character traits we expect when we think of Einstein. He has a German accent, speaks in a friendly and slightly strange manner and he is reflective about his role in life. He also has a dry sense of humour, which is represented in the way he speaks.

However, from a technological point of view, one more thing was key to making Digital Einstein work: he had to reply quickly enough for the experience to actually feel like a conversation. To achieve this, the text produced by the computational knowledge engine had to be sent to Aflorithmic’s API and rendered in Einstein’s voice in near real time.

After giving all we had to solve this challenge, we got the response time down from 12 seconds to under 3 seconds, a reduction of more than 75% (roughly a fourfold speed-up), realised in only 2 weeks!
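
As a quick check on those figures, here is a minimal sketch of the arithmetic. It assumes the "under 3 seconds" figure is exactly 3.0 seconds, so the results are lower bounds; the variable names are purely illustrative.

```python
# Response times quoted in the text. "Under 3 seconds" is treated as
# exactly 3.0 here (an assumption), so the results are lower bounds.
before_s = 12.0
after_s = 3.0

# Share of the original waiting time that was removed.
reduction_pct = (before_s - after_s) / before_s * 100

# How many times faster a reply arrives.
speedup = before_s / after_s

print(f"latency reduction: {reduction_pct:.0f}%")
print(f"speed-up: {speedup:.0f}x")
```

In other words, a listener waits at most a quarter as long for a reply as before.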


Being a part of this project was a great experience. It really shows what conversational AI can be, and it goes far beyond chatbots or customer service: education can become more engaging and comprehensive, social commerce will help customers find the product that best fits their needs, and even loneliness and therapy could be revolutionised by conversational AI. Voice cloning plays a major role in this.

About: AudioStack is a London/Barcelona-based technology company. Its platform enables fully automated, scalable audio production using synthetic media, voice cloning, and audio mastering, and delivers the result on any device, such as websites, mobile apps, or smart speakers. With this Audio-As-A-Service, anybody can create beautiful-sounding audio, from simple text to music and complex audio engineering, without any previous experience. The team consists of highly skilled specialists in machine learning, software development, voice synthesis, AI research, audio engineering, and product development.

About AudioStack

AudioStack is the world's leading end-to-end enterprise solution for AI audio production. Our proprietary technology connects AI-powered media creation forms such as AI script generation, text-to-speech, speech-to-speech, generative music, and dynamic versioning. AudioStack unlocks cost and time-efficient audio that is addressable at scale, without compromising on quality.